
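The analysis below, including the material behind the links, is a short summary of what I have learned online and used in practice. It is based on Prometheus 2.27.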

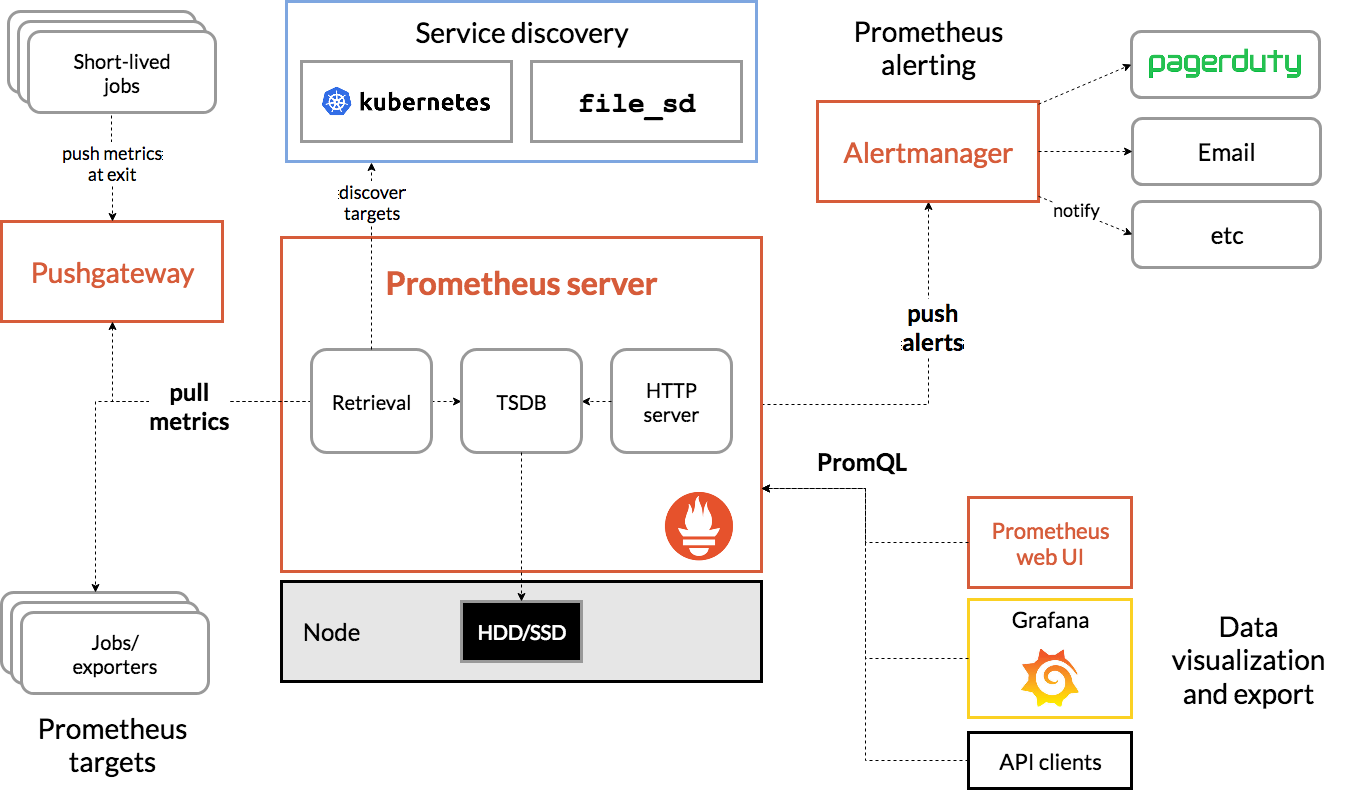

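Architecture overview

The core modules of the Prometheus server are the HTTP server, the TSDB, service discovery, and metric scraping.

The overall Prometheus workflow is roughly:

- Service discovery tells the server what to scrape

- Scraped metric data is written to the TSDB

- Clients query the results with PromQL through the HTTP server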
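The three steps above can be pictured with a toy pipeline. This is only a minimal sketch, not Prometheus code: `discover`, `scrape`, `memStore`, and `query` are hypothetical stand-ins for service discovery, the scrape loop, the TSDB, and the PromQL endpoint.

```go
package main

import "fmt"

// sample is one scraped value (hypothetical type for this sketch).
type sample struct {
	target string
	metric string
	value  float64
}

// memStore stands in for the TSDB: it simply keeps samples in memory.
type memStore struct{ samples []sample }

func (s *memStore) append(v sample) { s.samples = append(s.samples, v) }

// query returns every stored value for a metric name, in insertion order.
func (s *memStore) query(metric string) []float64 {
	var out []float64
	for _, v := range s.samples {
		if v.metric == metric {
			out = append(out, v.value)
		}
	}
	return out
}

// discover stands in for service discovery and returns the targets to scrape.
func discover() []string { return []string{"node-a:9100", "node-b:9100"} }

// scrape stands in for fetching the metrics of one target.
func scrape(target string) []sample {
	return []sample{{target: target, metric: "up", value: 1}}
}

// runOnce performs one discovery and scrape cycle and stores the results.
func runOnce(store *memStore) {
	for _, t := range discover() {
		for _, s := range scrape(t) {
			store.append(s)
		}
	}
}

func main() {
	store := &memStore{}
	runOnce(store)
	fmt.Println(store.query("up"))
}
```

The real server runs these stages continuously and concurrently; the sketch only shows the direction in which data flows.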

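Main workflow (main.go)

- Define the command-line flags with their defaults and descriptions

- Parse the startup command line into the cfg instance

- Validate the configuration file (set with --config.file, default prometheus.yml)

- Log "Starting Prometheus" and the host system information

- Initialize the subtask objects

- Start all subtasks concurrently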
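The flag-parsing, logging, and concurrent-start steps in this list can be sketched as follows. This is a simplified illustration under stated assumptions: it uses the standard library `flag` package rather than whatever flag library main.go uses, it skips configuration validation, and `config`, `parseFlags`, and `runAll` are hypothetical names.

```go
package main

import (
	"flag"
	"fmt"
	"os"
	"sync"
)

// config plays the role of the cfg instance; its single field is hypothetical.
type config struct {
	configFile string
}

// parseFlags registers a flag with a default and a description, then parses args.
func parseFlags(args []string) (*config, error) {
	cfg := &config{}
	fs := flag.NewFlagSet("prometheus-sketch", flag.ContinueOnError)
	fs.StringVar(&cfg.configFile, "config.file", "prometheus.yml", "Prometheus configuration file path.")
	if err := fs.Parse(args); err != nil {
		return nil, err
	}
	return cfg, nil
}

// runAll starts every subtask in its own goroutine and waits for all of them.
func runAll(tasks []func()) {
	var wg sync.WaitGroup
	for _, task := range tasks {
		wg.Add(1)
		go func(run func()) {
			defer wg.Done()
			run()
		}(task)
	}
	wg.Wait()
}

func main() {
	cfg, err := parseFlags(os.Args[1:])
	if err != nil {
		os.Exit(2)
	}
	fmt.Println("Starting Prometheus (sketch), config file:", cfg.configFile)
	runAll([]func(){
		func() { fmt.Println("scrape manager running") },
		func() { fmt.Println("web handler running") },
	})
}
```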

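Service startup sequence

- Listen for a termination request (kill signal or web handler) and exit the program

- Start the Scrape Discovery manager

- Start the Notify Discovery manager

- Start the Scrape manager

- Start the Reload handler

- Load the initial configuration

- Start the Rule manager

- Initialize the TSDB

- Start the Web server

- Start the Notifier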

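Pre-start analysis

Storage component initialization

The Storage component is Prometheus's time series storage. It has two parts: localStorage, which in the current version is the TSDB and stores metrics locally, and remoteStorage, which stores metrics remotely. fanoutStorage sits in front of both as a read/write proxy. All three are created in main.go.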
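To make the proxy role of fanoutStorage concrete, here is a minimal sketch of the write path only: one append is forwarded to a primary store and then to every secondary store. The types `appender`, `memStorage`, and `fanout` are hypothetical and much simpler than the real storage interfaces; the read path is not modeled.

```go
package main

import "fmt"

// appender is a hypothetical, cut-down write interface.
type appender interface {
	Append(metric string, value float64) error
}

// memStorage is an in-memory store used for both "local" and "remote" here.
type memStorage struct {
	samples map[string][]float64
}

func newMemStorage() *memStorage {
	return &memStorage{samples: make(map[string][]float64)}
}

func (m *memStorage) Append(metric string, value float64) error {
	m.samples[metric] = append(m.samples[metric], value)
	return nil
}

// fanout forwards each write to the primary first, then to all secondaries.
type fanout struct {
	primary     appender
	secondaries []appender
}

func newFanout(primary appender, secondaries ...appender) *fanout {
	return &fanout{primary: primary, secondaries: secondaries}
}

// Append stops at the first store that reports an error.
func (f *fanout) Append(metric string, value float64) error {
	if err := f.primary.Append(metric, value); err != nil {
		return fmt.Errorf("primary: %w", err)
	}
	for i, s := range f.secondaries {
		if err := s.Append(metric, value); err != nil {
			return fmt.Errorf("secondary %d: %w", i, err)
		}
	}
	return nil
}

func main() {
	local, remote := newMemStorage(), newMemStorage()
	f := newFanout(local, remote)
	if err := f.Append("up", 1); err != nil {
		fmt.Println("append failed:", err)
		return
	}
	fmt.Println(len(local.samples["up"]), len(remote.samples["up"]))
}
```

Callers such as a scrape loop only need to know about the fanout; whether a remote store is configured is hidden behind it.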

prometheus/cmd/prometheus/main.go

    localStorage = &tsdb.ReadyStorage{} // local storage
    remoteStorage = remote.NewStorage(log.With(logger, "component", "remote"), // remote storage
        localStorage.StartTime, time.Duration(cfg.RemoteFlushDeadline))
    fanoutStorage = storage.NewFanout(logger, localStorage, remoteStorage) // read/write proxy

notifier component initialization

The notifier component sends alerts to AlertManager. It is initialized with notifier.NewManager.

prometheus/cmd/prometheus/main.go

    notifierManager = notifier.NewManager(&cfg.notifier, log.With(logger, "component", "notifier"))

discoveryManagerScrape component initialization

The discoveryManagerScrape component handles service discovery for scrape targets. The current version supports many service discovery systems, Kubernetes among them. It is initialized with discovery.NewManager.

prometheus/cmd/prometheus/main.go

    discoveryManagerScrape = discovery.NewManager(ctxScrape, log.With(logger, "component", "discovery manager scrape"), discovery.Name("scrape"))

discoveryManagerNotify component initialization

The discoveryManagerNotify component handles service discovery for alert notification targets, such as AlertManager instances. It is also initialized with discovery.NewManager. The difference is that discoveryManagerNotify serves notify, while discoveryManagerScrape serves scrape.

prometheus/cmd/prometheus/main.go

    discoveryManagerNotify = discovery.NewManager(ctxNotify, log.With(logger, "component", "discovery manager notify"), discovery.Name("notify"))

scrapeManager component initialization

The scrapeManager component takes the targets found by discoveryManagerScrape, scrapes all metrics from them, and stores the scraped metrics in fanoutStorage. It is initialized with scrape.NewManager.

prometheus/cmd/prometheus/main.go

    scrapeManager = scrape.NewManager(log.With(logger, "component", "scrape manager"), fanoutStorage)

queryEngine component

The queryEngine component runs the queries and evaluation needed by rules. It is initialized with promql.NewEngine.

prometheus/cmd/prometheus/main.go

    opts = promql.EngineOpts{
        Logger:        log.With(logger, "component", "query engine"),
        Reg:           prometheus.DefaultRegisterer,
        MaxConcurrent: cfg.queryConcurrency, // maximum number of concurrent queries
        MaxSamples:    cfg.queryMaxSamples,
        Timeout:       time.Duration(cfg.queryTimeout), // query timeout
    }
    queryEngine = promql.NewEngine(opts)

ruleManager component initialization

The ruleManager component is initialized with rules.NewManager. Its options bring several components together: storage, queryEngine, and notifier. The overall flow covers rule evaluation and alert sending.

prometheus/cmd/prometheus/main.go

    ruleManager = rules.NewManager(&rules.ManagerOptions{
        Appendable:      fanoutStorage,                                         // storage
        TSDB:            localStorage,                                          // local TSDB
        QueryFunc:       rules.EngineQueryFunc(queryEngine, fanoutStorage),     // rule evaluation
        NotifyFunc:      sendAlerts(notifierManager, cfg.web.ExternalURL.String()), // alert notification
        Context:         ctxRule,                                               // controls the ruleManager goroutines
        ExternalURL:     cfg.web.ExternalURL,                                   // URL exposed externally through the web component
        Registerer:      prometheus.DefaultRegisterer,
        Logger:          log.With(logger, "component", "rule manager"),
        OutageTolerance: time.Duration(cfg.outageTolerance), // keeps alert state across a Prometheus restart (https://ganeshvernekar.com/gsoc-2018/persist-for-state/)
        ForGracePeriod:  time.Duration(cfg.forGracePeriod),
        ResendDelay:     time.Duration(cfg.resendDelay),
    })

Web component initialization

The Web component gives external HTTP access to the Storage, queryEngine, scrapeManager, ruleManager, and notifier components. It is initialized as follows:

prometheus/cmd/prometheus/main.go

    cfg.web.Context = ctxWeb
    cfg.web.TSDB = localStorage.Get
    cfg.web.Storage = fanoutStorage
    cfg.web.QueryEngine = queryEngine
    cfg.web.ScrapeManager = scrapeManager
    cfg.web.RuleManager = ruleManager
    cfg.web.Notifier = notifierManager

    cfg.web.Version = &web.PrometheusVersion{
        Version:   version.Version,
        Revision:  version.Revision,
        Branch:    version.Branch,
        BuildUser: version.BuildUser,
        BuildDate: version.BuildDate,
        GoVersion: version.GoVersion,
    }

    cfg.web.Flags = map[string]string{}

    // Depends on cfg.web.ScrapeManager so needs to be after cfg.web.ScrapeManager = scrapeManager
    webHandler := web.New(log.With(logger, "component", "web"), &cfg.web)

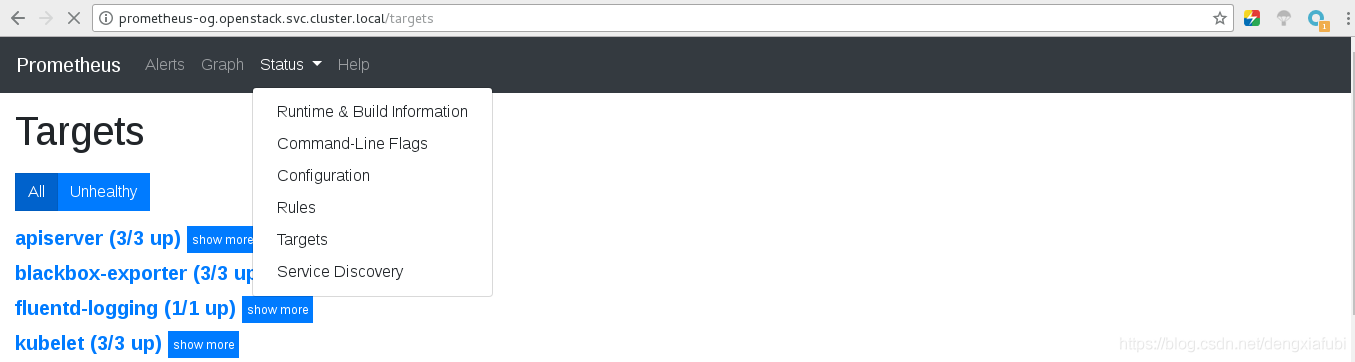

The figure shows how the components above are exposed through the web pages.

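Service configuration analysis

Every component except ruleManager manages its configuration through its own ApplyConfig method, called from an anonymous function where necessary; ruleManager uses its Update method instead (see prometheus/cmd/prometheus/main.go).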

For remoteStorage, webHandler, notifierManager, and scrapeManager, the cfg *config.Config parameter of ApplyConfig carries the whole prometheus.yml file (see prometheus/scrape/manager.go).

For discoveryManagerScrape and discoveryManagerNotify, the parameter of ApplyConfig carries only one section of the file (see prometheus/discovery/manager.go).

An anonymous function therefore has to extract the relevant section first (see prometheus/cmd/prometheus/main.go).

For ruleManager, an anonymous function extracts the rule_files section (see prometheus/cmd/prometheus/main.go).

The component's built-in Update method then applies it (see prometheus/rules/manager.go).

Finally, the reloadConfig method loads the configuration into each component (see prometheus/cmd/prometheus/main.go).

Service startup analysis

Startup relies on the github.com/oklog/oklog/pkg/group package, from which an object g is instantiated (see prometheus/cmd/prometheus/main.go).

The object g holds the entry point of every component. Each entry point is registered by calling the Add method on g, with scrapeManager as one example (see prometheus/cmd/prometheus/main.go).

Calling the run method on g starts all components (see prometheus/cmd/prometheus/main.go).

Startup is then complete.
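The add-then-run pattern used for startup can be illustrated with a small, self-contained group. This is a sketch of the general idea written with the standard library only, assuming each component supplies a blocking execute function and an interrupt function; the `group` and `actor` types here are hypothetical and are not the imported package.

```go
package main

import (
	"errors"
	"fmt"
)

// actor is one component: execute blocks until it is done, interrupt asks it to stop.
type actor struct {
	execute   func() error
	interrupt func(error)
}

// group collects actors and runs them together.
type group struct{ actors []actor }

// Add registers a component's entry point and its stop function.
func (g *group) Add(execute func() error, interrupt func(error)) {
	g.actors = append(g.actors, actor{execute, interrupt})
}

// Run starts every actor, waits for the first to return, interrupts all of
// them, waits for the rest to finish, and returns the first error.
func (g *group) Run() error {
	if len(g.actors) == 0 {
		return nil
	}
	errs := make(chan error, len(g.actors))
	for _, a := range g.actors {
		go func(a actor) { errs <- a.execute() }(a)
	}
	err := <-errs
	for _, a := range g.actors {
		a.interrupt(err)
	}
	for i := 1; i < cap(errs); i++ {
		<-errs
	}
	return err
}

// runDemo wires up a long-running component and one that exits immediately.
func runDemo() error {
	var g group

	// A stand-in for a manager that runs until it is told to stop.
	stop := make(chan struct{})
	g.Add(func() error {
		<-stop
		return nil
	}, func(error) {
		close(stop)
	})

	// A stand-in for the termination handler: it returns right away.
	g.Add(func() error {
		return errors.New("received termination signal")
	}, func(error) {})

	return g.Run()
}

func main() {
	fmt.Println("group exited:", runDemo())
}
```

The useful property is that when any one component exits, every other component is asked to stop, so the process shuts down as a unit.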

Annotated main function

prometheus/cmd/prometheus/main.go

    // Copyright 2015 The Prometheus Authors

References:

https://blog.csdn.net/dengxiafubi/article/details/102845639

https://so.csdn.net/so/search?q=Prometheus%E6%BA%90%E7%A0%81%E5%AD%A6%E4%B9%A0&t=blog&u=qq_35753140